Dyalog ’19 talks begin

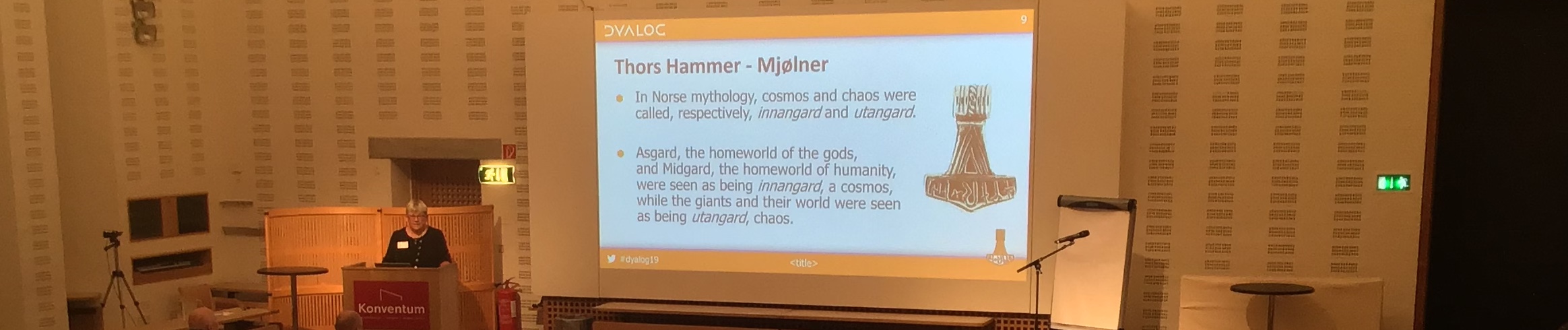

As usual, we began the series of User Meeting talks with a warm welcome from Managing Director Gitte Christensen. This year Gitte’s message felt somewhat spiritual as she described the lore of Thor’s hammer Mjölnir and its place in formal ceremonies. The choice of logo for this year’s User Meeting feels appropriate to the current zeitgeist: the desire to make some order from the chaos we may feel around us. You could feel a sense of hope in hard times.

Gitte welcomes us to Dyalog ’19

Hard and sad times have certainly befallen us recently, and we remember two APLers who sadly passed away this year: Harriett Neville, who was supposed to attend the User Meeting, and John Scholes, who left us in February. However, we also say hello to new faces at this User Meeting – Josh David and Nathan Rogers, who were hired to form our new US consulting team.

In consideration of new APLers, Gitte also gave a call to arms that we should “spread the gospel” about APL. She expressed how Dyalog is making it easier to introduce people to APL by having unregistered copies of Dyalog available for non-commercial users in the near future, and also by encouraging people to share their APL tools freely online using services such as GitHub.

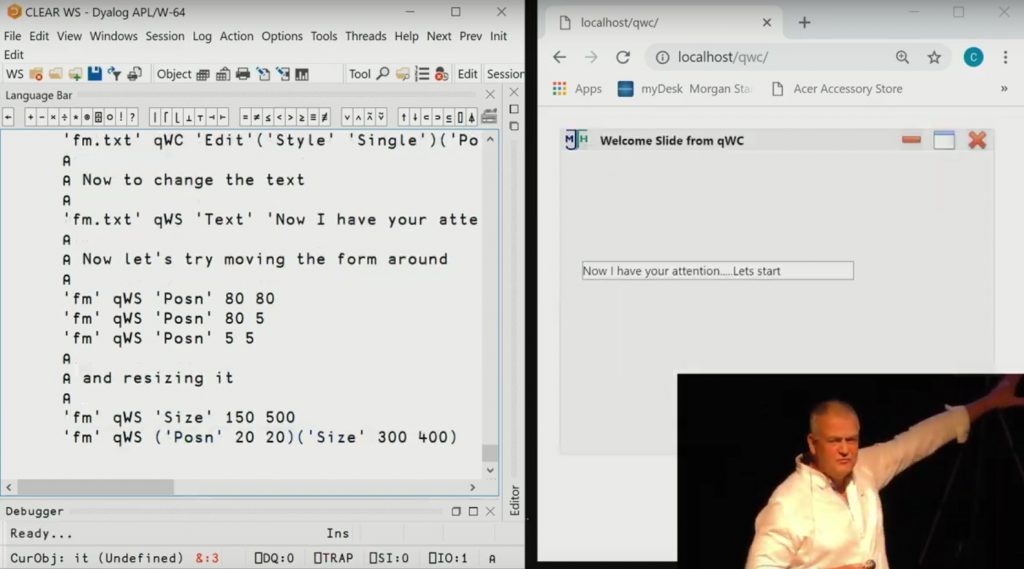

Morten shows us the road ahead

This was shortly followed by a road map of future Dyalog development from Technical Director Morten Kromberg. He also emphasised how Dyalog is making APL easier to find for new people, this time mentioning the APL Orchard chat room; the Dyalog Webinars, which have been running for about two years now; our talks in the wider programming community at events like LambdaConf and FunctionalConf; and of course the open source APL projects that Gitte had mentioned.

Morten also described how we have been working and continue to work to make APL applications easier to deploy, maintain, test and integrate with other frameworks and development processes.

There were no surprises in JD’s demonstration of the .NET Core Bridge. The functionality of the existing .NET Framework interface remains, but JD showed us what it would look like if .NET “was as portable as they tell us it should be” and could be used on Windows, macOS and Linux with the same APL code.

Marshall took us in a more technical direction, although still pointed towards the future of APL. He showed us the new operators constant ⍨, atop ⍤ and over ⍥ which we can look forward to in version 18.0. He also gave the suggested new nomenclature for the several types of function composition which will be available when these function-composition operators are released.

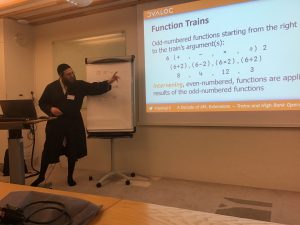

Marshall introduces new operators for Dyalog v18.0
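
For readers who do not yet read APL, the behaviour of the three operators can be roughly sketched in Python. This is only an illustration of the semantics as we understand them for version 18.0, not Dyalog code: the helper names `atop`, `over` and `constant` are hypothetical, and Python's one-or-two positional arguments stand in for APL's monadic and dyadic calls.

```python
import operator

def atop(f, g):
    """Sketch of f⍤g: apply g to the argument(s), then f to the single result."""
    def derived(*args):
        return f(g(*args))
    return derived

def over(f, g):
    """Sketch of f⍥g: preprocess every argument with g, then apply f."""
    def derived(*args):
        return f(*(g(a) for a in args))
    return derived

def constant(value):
    """Sketch of A⍨: ignore the argument(s) and always return A."""
    def derived(*args):
        return value
    return derived

# Two-argument (dyadic) use
distance = atop(abs, operator.sub)       # |x - y|
sum_of_sizes = over(operator.add, abs)   # |x| + |y|
always_42 = constant(42)

print(distance(3, 10))
print(sum_of_sizes(-3, 4))
print(always_42("anything", "at all"))
```

With one argument, both `atop(f, g)` and `over(f, g)` reduce to plain composition `f(g(y))`; the difference only shows up when two arguments are supplied.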

Tommy Johannessen has been running his one-man company for some decades now, and we got to see a great user story as he demonstrated the interface to his school lunch system SkoleMad. It enables the delivery of 20,000 meals daily to 100,000 students!

Morten and Adam teamed up again to bring ]LINK to a wider audience and emphasize the importance of using text-based APL source files to modernize your APL development workflows. This is also a vital tool if you want to share your code easily with services like GitHub.

Paul Mansour demonstrates Git integration with AcreTools

The theme of modern APL development continued seamlessly as Paul Mansour of The Carlisle Group presented a Git workflow for Dyalog APL using the Acre project management system. He demonstrated using AcreTools user commands and broke down a Git workflow into something accessible even to people who are new to Git and may find it slightly daunting to use.

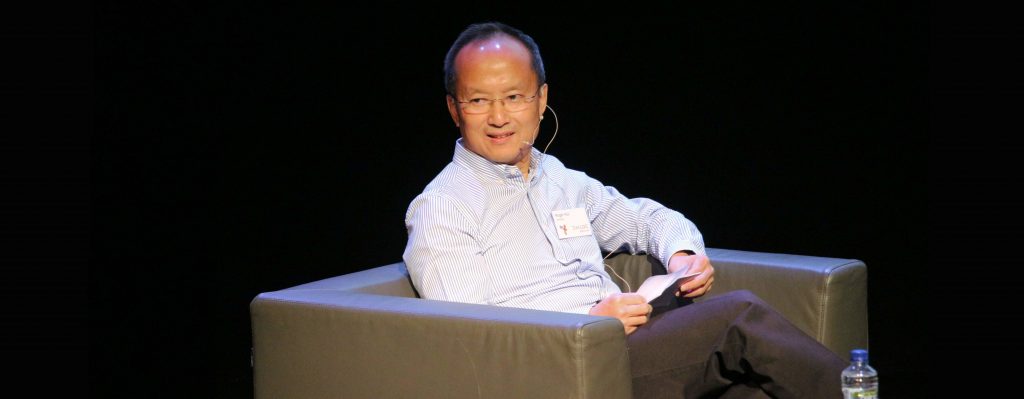

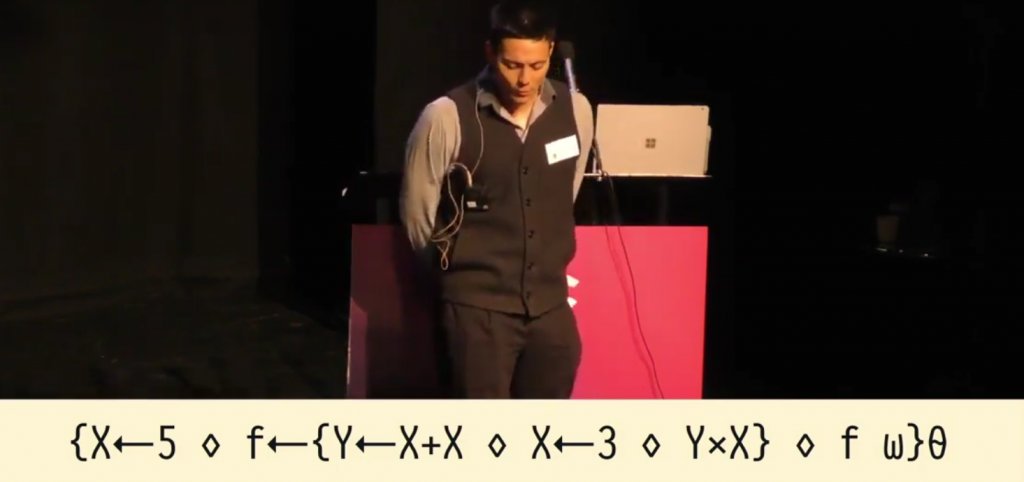

The co-dfns compiler is a staple of the Dyalog User Meetings at this point. Aaron Hsu’s PhD project allows APL code to run fast on GPUs, and this year he was clearly excited to show us some of the revelations that have come from the development of co-dfns. These revelations came in the form of some quite high level development concepts for us to chew on and there are sure to be some interesting conversations as a result.

Aaron Hsu pontificates

The future is here, the future is now and the future is cross-platform. Richard Smith brought us yet another tool for the future Dyalog tool box: Cross-Platform Configuration Files. The project is in early development, so the majority of the talk became an interesting debate about the pros and cons of various ideas for the format. XML, JSON, YAML or another – who will win? Only time will tell…

Geoff Streeter regales and informs about shared code files

Geoff told us the story of how a request to have functions loaded on demand led him to the germ of the idea, and the eventual implementation, of shared code files. The audience listened attentively and silently, and the air of the Damgårdsalen was that of a village gathered around listening to their elder.

Richard Smith returned to show us some datetime functions to help us find out whether or not it is yet Christmas (SPOILER: It’s not Christmas yet). His slick demonstration reassured us that handling dates and times in Dyalog can and will be as painless as the idiosyncrasies of time and calendars permit – again, after some details have been worked out.

Now we are adjourning for dinner. Later this evening we will be puzzling some puzzles in the APL Team Contest, hosted by members of Liceo Scientifico GB Grassi Saronno (a scientific high school in Italy). Don’t forget to check out tomorrow’s blog post to see how things went!
