We received some excellent competition entries this year. Once again, thank you to all those who participated, congratulations to this year’s winners, and thank you to the Grand Prize Winner Andrii Makukha for his presentation at this year’s user meeting.

This post contains some suggested Phase I solutions along with some comments from the judges. Before each solution there is a brief summary of the problem and a link to the full problem on the practice problems site; you can also download the full set of problem descriptions as a PDF.

This page contains spoilers. The suggested solutions are not the only correct solutions, and may not necessarily be best practice depending on your application or most optimal in terms of performance, but they do solve the problems in some particularly interesting and elegant ways. If you’d like to try to solve the problems yourself before looking at these example solutions, you can use problems.tryapl.org to check your solutions.

1: Let’s Split

The first problem was to write a function splitting a vector right argument into a nested vector of vectors according to a signed integer left argument. For example:

¯3 (your_function) 'DyalogAPL'

┌──────┬───┐

│Dyalog│APL│

└──────┴───┘

Most entrants successfully solved this problem using dyadic take ↑ and drop ↓.

{c←⍺+(⍺<0)×≢⍵ ⋄ (c↑⍵)(c↓⍵)}

It was common to use the left argument ⍺ as-is with ↑ and ↓, and swap the two parts of the result using ⌽⍣condition or condition⌽array, but the solution above avoids the swap by computing appropriate arguments to ↑ and ↓.

Try it now with TryAPL

2: Character Building

In problem 2, we asked participants to partition a vector of UTF-8 character encodings, similarly to 'UTF-8'∘⎕UCS¨, without using ⎕UCS. For example:

(your_function) 68 194 165 226 141 186 226 140 138 240 159 148 178 57

┌──┬───────┬───────────┬───────────┬───────────────┬──┐

│68│194 165│226 141 186│226 140 138│240 159 148 178│57│

└──┴───────┴───────────┴───────────┴───────────────┴──┘

Eight participants submitted this (or a variation thereof):

{⍵⊂⍨1≠128 192⍸⍵}

Instead of doing multiple comparisons, this neatly uses interval index ⍸ to check which range the argument is in. It then uses partitioned-enclose ⊂ to create partitions beginning where code points are either below 128 or above 192.

Try it now with TryAPL

3: Excel-lent Columns

Problem 3 was simply to convert Microsoft Excel-style column letters to an integer. For example:

(your_function) 'APL'

1104

Thirty-five participants submitted variations on this:

{26⊥⎕A⍳⍵}

While simple at first glance, it is actually quite involved because ⎕A⍳⍵ can give 26 (for Z) which isn’t a valid digit in base-26. However, decode ⊥ handles out-of-bounds digits by carrying.

Try it now with TryAPL

4: Take a Leap

The task for problem 4 was to write a function to verify which of an array of integer years are leap years. For example:

(your_function) 1900+10 10⍴⍳100

0 0 0 1 0 0 0 1 0 0

0 1 0 0 0 1 0 0 0 1

0 0 0 1 0 0 0 1 0 0

0 1 0 0 0 1 0 0 0 1

0 0 0 1 0 0 0 1 0 0

0 1 0 0 0 1 0 0 0 1

0 0 0 1 0 0 0 1 0 0

0 1 0 0 0 1 0 0 0 1

0 0 0 1 0 0 0 1 0 0

0 1 0 0 0 1 0 0 0 1

We had eight solutions like this one:

{0≠.=4 100 400∘.|⍵}

At first, it generates a 3-element vector showing whether the argument is divisible by 4, 100 or 400.

0=4 100 400∘.|1900

1 1 0

The cleverness then is that ≠⌿ is used to express the logic of the leap-year algorithm. From Wikipedia:

if (year is not divisible by 4) then (it is a common year)

else if (year is not divisible by 100) then (it is a leap year)

else if (year is not divisible by 400) then (it is a common year)

else (it is a leap year)

We can check this with all possible length-3 boolean arguments:

2⊥⍣¯1⍳¯1+2*3

0 0 0 1 1 1 1

0 1 1 0 0 1 1

1 0 1 0 1 0 1

≠⌿2⊥⍣¯1⍳¯1+2*3

1 1 0 1 0 0 1

Consider each case in turn:

1. Leap year, return 1

2. Can never occur

3. Not a leap year, return 0

4. Can never occur

5. Can never occur

6. Can never occur

7. Leap year, return 1

It is good because it uses no explicit loops and keeps intermediate values flat (no nesting). The solution leverages that each leap year rule is an exception to the previous one, and this particular formulation employs an unusual inner product ≠.= (equivalent to {≠/⍺=⍵} for vector arguments) to compute the parity of the divisibilities.

Try it now with TryAPL

5: Stepping in the Proper Direction

Problem 5 was to create a list generator somewhat similar to iota ⍳. However, this list generator takes a 2-element integer right argument and returns a list starting from the first integer and either increasing or decreasing in steps of 1 until the last integer inclusively. For example:

(your_function) 4 ¯3

4 3 2 1 0 ¯1 ¯2 ¯3

Only one person had this exact solution, though many solutions were not too far off:

{(⊃⍵)-0,(××⍳∘|)-/⍵}

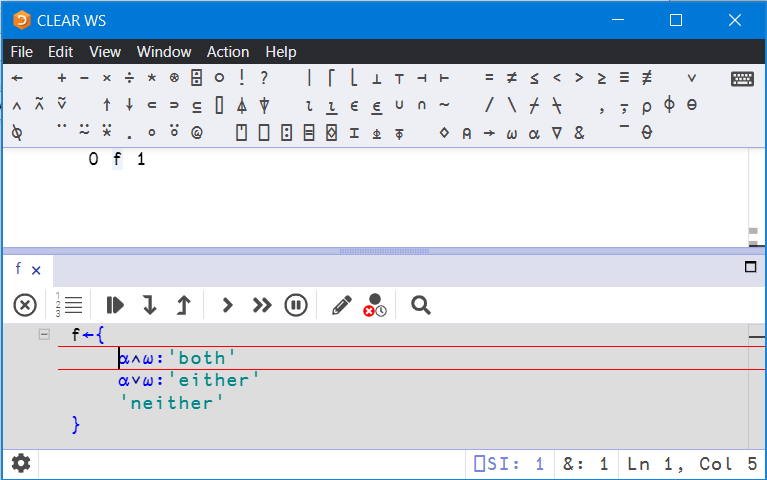

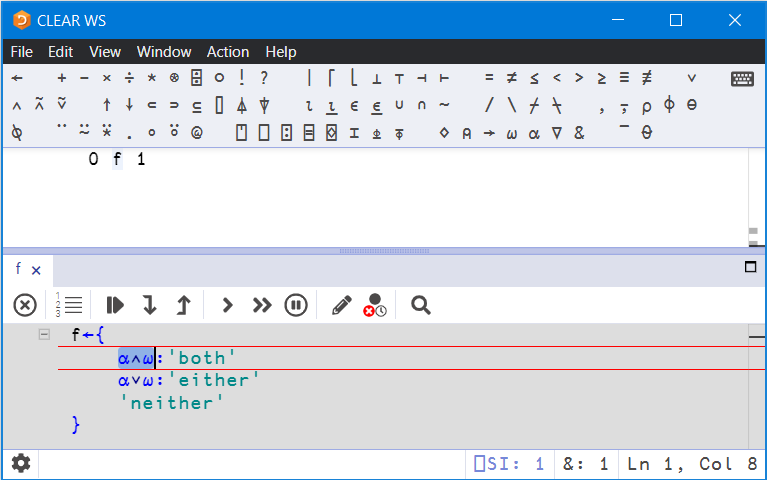

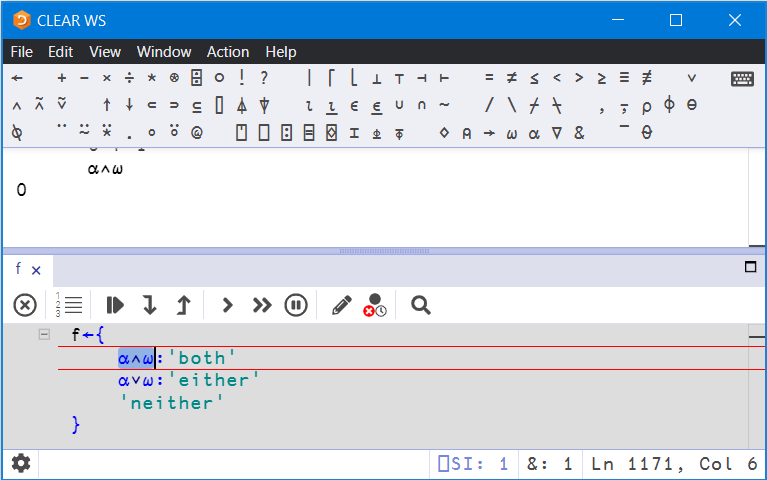

This dfn contains a 3-train or fork. Having seen contestants use the following format before, we feel compelled to provide you with a commented version of the above:

{

-/⍵ ⍝ The length of the result is 1 more than the difference

⍳∘|) ⍝ Integers up to the absolute difference

× ⍝ times

(× ⍝ The sign of the difference

0, ⍝ Make the range inclusive

- ⍝ Use arithmetic to compute the correct result

(⊃⍵) ⍝ From the first value

}

Alternatively:

{

(⊃⍵) ⍝ From the first value

- ⍝ to

0, ⍝ inclusively

(× ⍝ The sign of...

× ⍝ times

⍳∘|) ⍝ Integers in the range of...

-/⍵ ⍝ The difference

}

This one excels in only computing necessary values once, and cleverly adjusts the generated values to rise or fall as needed, using the sign of the difference between the beginning and end points of the target range.

Try it now with TryAPL

6: Move to the Front

The task for problem 6 was to move all elements in the right argument vector equal to the left argument scalar to the start of that vector. For example:

'a' (your_function) 'dyalog apl for all'

aaadylog pl for ll

Only one participant found this train, though two others submitted dfns using the same idea:

∩⍨,~⍨

Instead of computing indices or selecting elements, this simply employs two set functions, intersection ∩ and without ~. The asymmetry of intersection, namely that it preserves duplicates from its left argument, is here used to great advantage.

Try it now with TryAPL

7: See You in a Bit

Problem 7 involved writing a function to compare set bits in the base-2 representations of its integer arguments. For example:

2 (your_function) 7 ⍝ is 2 in 7 (1+2+4)?

1

Eleven solutions used this method:

∧/(≤/2⊥⍣¯1,)

Indeed, the problem is about finding particular set bits in a binary number, hence the 2⊥⍣¯1. The overall function is a 2-train or atop, where the right-hand function is itself a 3-train or fork.

We can break it down as follows:

∧/ ⍝ Are all of (0 if any are *not*)

(≤/ ⍝ Set left bits also set in right

2⊥⍣¯1 ⍝ in The base-2 representation of

,) ⍝ The left and right arguments?

The function less than or equal to ≤ only returns 0 where a left bit is not found in the right argument:

5((⊢,⍥⊂⍪⍤(≤/))2⊥⍣¯1,)9 ⍝ Just a fancy way of visualising the intermediate and final result

┌───┬─┐

│0 1│1│

│1 0│0│

│0 0│1│

│1 1│1│

└───┴─┘

This is pretty impressive, as it both demonstrates array orientation (in treating both arguments together) and uses Dyalog APL’s fancy inverse operator ⍣¯1 to use as many bits as necessary, while keeping the two representations aligned.

Try it now with TryAPL

8: Zigzag Numbers

The solution to problem 8 returns a 1 if its integer argument’s digits consecutively rise and fall throughout. For example, 12121 is a zigzag number, but 1221 is not.

We saw a handful of solutions of this type:

∧/0>2×/2-/10∘⊥⍣¯1

We can decompose it like so:

∧/ ⍝ Are all

0> ⍝ Negative for...

2×/ ⍝ Consecutively different signs of...

2-/ ⍝ The pairwise difference of...

10∘⊥⍣¯1 ⍝ The digits of the input?

It constitutes a good example of how the pattern in trains often is a natural one (this is actually an 8-train), and also shows off two uses of pairwise application 2f/ to compute the pairwise difference (the sign of which indicates the direction from digit to digit) and then the pairwise product (which due to the rules for multiplication of signed numbers indicates if a change has happened or not).

Try it now with TryAPL

9: Rise and Fall

Problem 9 involved writing a function to verify if a numeric vector has two properties:

- The elements increase or stay the same until the “apex” (highest value) is reached

- After the apex, any remaining values decrease or remain the same

For example:

(your_solution)¨(1 2 2 3 1)(1 2 3 2 1)(1 3 2 3 1)

1 1 0

Actually, nobody had this exact solution, however, a handful came very close:

⊢≡⌈\⌊∘⌽⌈\∘⌽

Instead of trying to analyse the numbers, it does a running maximum from the left and from the right. If the minimum of those matches the original numbers, then we have exactly one peak.

⊢ ⍝ The input vector

≡ ⍝ matches

⌈\ ⍝ The max-scan from the left (msl)

⌊∘⌽ ⍝ The lower of msl and ⌽msr

⌈\∘⌽ ⍝ The max-scan from the right (msr)

We can visualise ⌊∘⌽ by stacking its arguments on top of one another:

1 3 5,[0.5]⌽2 5 4

1 3 5

4 5 2

⌊⌿1 3 5,[0.5]⌽2 5 4

1 3 2

1 3 5⌊∘⌽2 5 4

1 3 2

When used with the two max-scans, we can see how this solution works.

(⌈\,[0.5]∘⌽⌈\∘⌽)1 3 2

1 3 3

3 3 2

(⌈\,[0.5]∘⌽⌈\∘⌽)1 0 2

1 1 2

2 2 2

Try it now with TryAPL

10: Stacking It Up

The task for problem 10 was to format a nested vector of simple arrays as if displayed using {⎕←⍵}¨, and then to return the formatted character matrix ({⎕←⍵}¨ simply returns its argument). For example:

(your_function) (3 3⍴⍳9)(↑'Adam' 'Michael')(⍳10) '*'(5 5⍴⍳25)

1 2 3

4 5 6

7 8 9

Adam

Michael

1 2 3 4 5 6 7 8 9 10

*

1 2 3 4 5

6 7 8 9 10

11 12 13 14 15

16 17 18 19 20

21 22 23 24 25

We had a couple of entries like this:

{¯1↓∊(⍕¨⍵),¨⎕UCS 13}

This was a tricky problem, especially as the automated testing didn’t include the 'a'1 test case, and many didn’t catch that one. Whilst most people wrote complicated code to get matrices for the element arrays, two participants thought outside the box, and simply joined the arrays with newlines after converting them to text.

Try it now with TryAPL

Conclusion

As always, not only are we deeply impressed by the ingenuity and cleverness of your submissions, but we also continue to be amazed by the number of people successfully solving most, if not all, of the problems in Phase I.

If you’d like to be notified when next year’s competition launches, go to dyalogaplcompetition.com and submit your email address.

The story of how Rodrigo got his first internship at Dyalog is, in his opinion, a textbook example of serendipity. As 2020 started, Rodrigo began actively participating in an online code golf community, where people try to solve programming challenges in as few bytes of code as possible. Whilst his golfing skills were possibly lacking, the challenges he posted were usually well accepted. Posting many challenges meant Rodrigo got exposed to answers in all sorts of programming languages, from C, Java, Python and JavaScript, to Jelly, 05AB1E, Husk…and APL. Because of the context and the aspect of it, Rodrigo first thought APL was one of those “esolangs” and not a serious programming language.

The story of how Rodrigo got his first internship at Dyalog is, in his opinion, a textbook example of serendipity. As 2020 started, Rodrigo began actively participating in an online code golf community, where people try to solve programming challenges in as few bytes of code as possible. Whilst his golfing skills were possibly lacking, the challenges he posted were usually well accepted. Posting many challenges meant Rodrigo got exposed to answers in all sorts of programming languages, from C, Java, Python and JavaScript, to Jelly, 05AB1E, Husk…and APL. Because of the context and the aspect of it, Rodrigo first thought APL was one of those “esolangs” and not a serious programming language.

Follow

Follow Shuhao joined Dyalog straight after he completed his Master’s degree in quantum computing, which happened to be during the second COVID-related lockdown in the UK. This has given him a quite unusual experience of starting a career as he has yet to meet a single member of the Dyalog team face to face! Shuhao obtained his Bachelor’s degree in mathematics – although he was interested in computer science, he studied mathematics as a way of looking for the root of CS and computing. He has broad interests across different software including Matlab, Python and LaTeX and has developed a solid knowledge on C++.

Shuhao joined Dyalog straight after he completed his Master’s degree in quantum computing, which happened to be during the second COVID-related lockdown in the UK. This has given him a quite unusual experience of starting a career as he has yet to meet a single member of the Dyalog team face to face! Shuhao obtained his Bachelor’s degree in mathematics – although he was interested in computer science, he studied mathematics as a way of looking for the root of CS and computing. He has broad interests across different software including Matlab, Python and LaTeX and has developed a solid knowledge on C++.

We are happy to announce that the full set of recordings from Dyalog ’20 online is now available. So if you missed the all or any of the talks, or would like to revisit one of the presentations, head over to

We are happy to announce that the full set of recordings from Dyalog ’20 online is now available. So if you missed the all or any of the talks, or would like to revisit one of the presentations, head over to