Welcome to the second week of recordings from Dyalog ’19 in Elsinore! This week we are featuring three presentations about work that is either already available or will appear in Dyalog version 18.0 in 2020.

All three talks are by members of the Dyalog development team, but Adám Brudzewsky’s talk on APLcart is labelled U14 because the bulk of the work has been done in his own time. APLcart is a novel way of making it easy to find information about how to do things in APL, as opposed to finding documentation for a particular feature of the language or development environment. Although it is brand new, APLcart is already a very effective tool. I recently had the pleasure of teaching an introductory APL course where I told the students about APLcart on the first day. By the end of the week, every time the students had to solve an exercise, they headed straight for APLcart. Please check it out, try to find things, and let Adám know about anything that you can’t find so he can add it!

One of our most important goals is to make Dyalog APL as capable – and as similar – as possible on all significant computing platforms, ideally allowing you to develop on any supported platform and deploy the solution to all others. One of the remaining hurdles is configuration of the interpreter, the development environment – and applications. This is currently done very differently from one platform to the next. Richard Smith introduces a project he is working on that aims to provide a single format for configuration files across all platforms. The choice of a file format is not an easy one, and a significant part of this recording consists of comments from the audience – including members of the Dyalog development team – voicing a variety of opinions about this.

Finally, a delicacy for language geeks: The word tacit means “unspoken”. Tacit programming involves expressions consisting only of functions, operators and constants; the arguments are implied. For example, the expression (+⌿ ÷ ≢) is a function train, which should be read “sum divided by count” – without speaking about what the functions are applied to. The corresponding “explicit” expression would be {(+⌿⍵) ÷ ≢⍵}, which mentions the argument twice. In his talk on Tacit Techniques, Marshall Lochbaum explains how tacit programming can be elegant and powerful, and why a set of new operators planned for Dyalog version 18.0 will be particularly useful for tacit programming. His talk includes a neat way of ranking poker hands with one of these new operators, over (⍥).
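For readers who do not read APL yet, the idea behind the train can be modelled in a mainstream language. The following is only a rough Python sketch, not how Dyalog implements trains: the helper names `fork`, `over`, `mean` and `same_length` are made up for illustration. It assumes the usual reading of a three-function train, where the outer functions are applied to the argument and the middle function combines the results, and of over, where both arguments are pre-processed by one function before the other is applied.

```python
import operator

def fork(f, g, h):
    """Model of a three-function train (f g h): apply f and h to the
    argument, then combine the two results with g."""
    return lambda w: g(f(w), h(w))

def over(f, g):
    """Model of dyadic f⍥g: pre-process both arguments with g,
    then apply f to the two results."""
    return lambda a, w: f(g(a), g(w))

# (+⌿ ÷ ≢) : "sum divided by count"; the argument is never named
mean = fork(sum, operator.truediv, len)

# =⍥≢ : "do the two arguments have the same count?"
same_length = over(operator.eq, len)

print(mean([3, 1, 4, 1, 5]))          # 2.8
print(same_length("apple", "melon"))  # True
```

Neither `mean` nor `same_length` mentions its arguments in its definition, which is the sense in which such definitions are tacit.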

Summary of this week’s videos:

- U14: APLcart: A Novel Approach to Finding Your Way in APL (Adám Brudzewsky)

- D06: Cross-Platform Configuration Files (Richard Smith)

- D04: Tacit Techniques with Dyalog version 18.0 Operators (Marshall Lochbaum)

Join us again next week for another three recordings from Dyalog ’19.

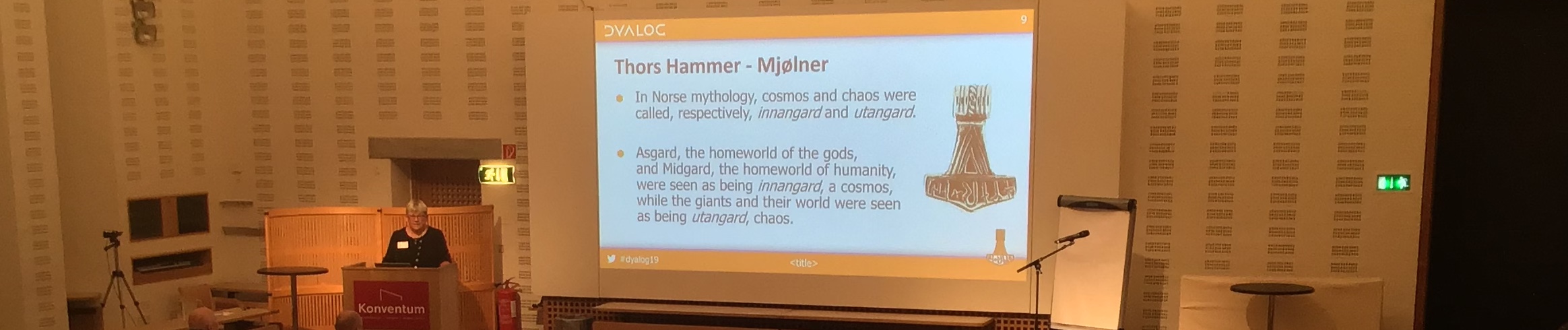

2019 is the first “Year of the Hammer”: after seven years of Norse wyrms and seven years of Viking ships, we are now entering the era of seven hammer-inspired logos. As Gitte explains in her talk, we are celebrating the first year under Thor’s Hammer by making Dyalog APL freely available for non-commercial use – without requiring registration – under Microsoft Windows, Apple macOS and GNU/Linux (including a collection of public Docker images). The intention is to make APL much more easily accessible for experiments – especially in the cloud!

After last year’s Technical Road Map, which was almost entirely a live demonstration of using APL with modern development tools like Git, VS Code and Docker, I decided to play it safe this year and do no demos at all in my keynote. Instead, I concentrated on explaining some of our thoughts about making Dyalog APL easier to discover, learn and integrate into modern frameworks and development processes – and making applications written in APL easier to deploy and maintain. As a result, despite the world premiere of our new Webinar Jingle, composed by Stefano Lanzavecchia (

The title of John’s talk was “Cor(e) Blimey!”. The Cor(e) is of course a reference to Microsoft’s “.NET Core” but if English is not your first language, the title of John’s talk may need a little explanation. “Cor blimey” is an exclamation of surprise, a euphemism derived from “God Blind Me”. In this talk, John explains how Dyalog is poised to provide a bridge to Microsoft’s new portable, open source version of .NET. Scheduled for release with Dyalog version 18.0 next year, this will provide APL users with access to a vast collection of libraries under Linux and macOS, in addition to Windows.